This story is part of MOLD Magazine: Issue 04, Designing for the Senses.

While studying in medical school just two decades ago, I learned that the five senses (touch, sight, hearing, smell and taste) were a set of systems working almost independently. But in the last ten years, new evidence has emerged about crossmodal interactions related to food perception. Since the dawn of modern science we’ve known that the act of eating engages the five senses for evolutionary reasons, to aid the survival of each individual organism of a species. More recent findings show that the five sensory modalities allow us to enjoy food and to create a whole imaginary world around each perceptual experience, reaching the emotional brain to give each experience a unique feeling. For this reason, food is one of the best subjects for studying sensory integration, and it is helping to drive neurophysiological research into multisensory perception.

The hallmark of the multisensory model of perception is crossmodal interaction. Every time you choose something to eat, your decision-making process is triggered mainly by visual information. However, science shows that vision is not just one sense; it is many senses. Food color activates neuronal circuits related to aroma, taste and tactile perception because those are learned associations across sensory inputs. The same mechanism interconnects the other senses too. For instance, if the texture of a bread crust is not what the diner expects, say less crispy, that bread could be perceived as less tasty, even if there is no real variation in its taste profile. The key to this example is that food texture also carries gustatory information because, at a neuronal level, the senses are cross-connected.

The way in which each of us builds perceptual reality is based on those crossmodal interactions, which also determine our behavior as consumers. Following this idea, one might think that once those interactions are defined they cannot be modified, but surprisingly it’s possible to change them. Neuroplasticity is a well-known neurophysiological mechanism by which neurons can be reconnected throughout life, something that I characterized during my PhD studies on visual system development. A particular sensory input, like exposure to light or darkness, can completely change which kinds of molecules are released by a niche of neurons, defining their cellular fate and interconnections. In other words, the brain has the ability to reorganize itself by establishing new synaptic connections depending on the stimuli. This neuroplasticity is in action, for instance, in the way a sommelier develops their ability to recognize a wide variety of subtly different aromas: by repeatedly smelling different volatile compounds they are establishing and reinforcing neuronal connections in their olfactory system.

However, the state of the art in multisensory perception is still mainly confined to labs and the minds of a few avant-garde chefs and designers of eating experiences. Beyond that, most people are unaware of this new model of perception and the potential it offers.

Critically, there is a kind of standardized paradigm of sensory perception. This means that our sensory systems have been shaped, mainly by Western cultures, to enjoy food and the eating experience in a very structured way. Western palates tend to recoil from the slimy texture and potent aroma of Japanese nattō or the act of eating insects with one’s hands. Our brains are multisensory but, by a specific neuronal inhibitory mechanism, we discard that information by focusing our attention separately on each sensory modality. As a result, that standardization keeps us from understanding food and culinary diversity around the world. This means that our liking of a food is just a reflection of synaptic pathways determined by repeated exposures to the same stimuli.

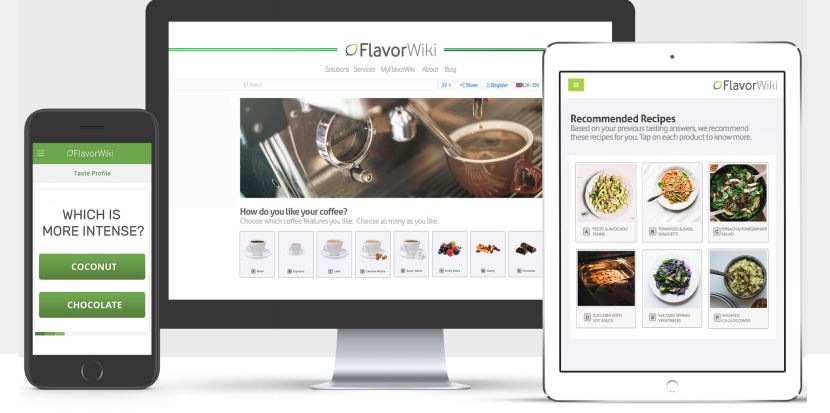

Moreover, as consumers in the modern world, we have always been treated as sensory equals, and this assumption has increased perceptual homogeneity among us. Food products are designed to satisfy the expectations of the average consumer. But people are unique and have unique perceptual thresholds. It’s no coincidence that there is now a growing demand for more complex and personalized experiences, which look set to shape the future of food consumption, consumer experience, sensory marketing and more. For instance, food packaging shows not just the ingredients but also the sensory profile and the eating experiences diners can choose, from tableware to ambience, sound and lighting.

Five years ago I came to Italy to work on a European Commission postdoctoral project to develop a digital olfactory biosensor, something like an artificial nose that could be used to detect unsafe volatiles or the pheromones that many living beings use for social and emotional communication. My work was to synthesize odor receptors in vitro and then, by in silico mutation, customize them to bind targeted volatile molecules. The result was a prototype able to detect and recognize volatile compounds at levels so low that they are imperceptible to human noses. It is now possible to develop a sensor capable of recognizing a group of selected compounds, and this growing technology can be applied to specific purposes such as detecting potential environmental hazards or early diagnosis by volatile markers in medical sciences.

However, technologies in digital olfaction are still quite incipient. The reason is that, unlike other sensory systems, olfaction, along with gustation, is a chemical sense, meaning that molecule-receptor binding is required to trigger perception. Odor receptors are located along the olfactory epithelium, a stretch of tissue in the nasal cavity, and odor molecules can reach them in two ways. Odorants can enter the nose via the nostrils (orthonasal perception) or can be released in the oral cavity and reach the olfactory epithelium via the nasopharynx (retronasal perception). Whereas retronasal perception is more closely related to the release of volatiles from food being chewed in the mouth, the orthonasal route plays an important role in smelling in general, not just in food. It is the latter on which digital olfaction research mainly focuses. But to create just one aroma, and deliver an olfactory message, it’s necessary to release a very specific set of odorants: although we tend to conceive of an aroma as a single aromatic compound, the reality is that to perceive “real” oranges, for instance, you need more than 20 volatile compounds. Thus, today, digital olfaction deals mainly with the issue of trying to mimic this complexity.

But if, or when, we see success, the possibilities are vast. Besides being useful for enjoying food and gaining insight into the world around us, olfaction is intimately related to social communication and emotions: we choose our partners and recognize family members by body odors, and we are capable of evoking a forgotten memory just by smelling a particular scent. With this in mind, digital olfaction and other emerging technologies are investigating not just sensorial features but the potential to recreate emotional states. For instance, could we connect people to nature or engage people with environmental concerns by using olfactory stimulation? Olfactory artist Peter de Cupere, in his 2018 work Smoke Flowers, raised awareness about urban pollution by displaying real flowers that emit the scent of smog. In the future, this work could be explored through a digital interface by observers from all over the world. In addition, if we follow the direction of the new paradigm of perception, through multisensory education and exploration, we can see the possibility of rewiring brain circuits to get customized experiences beyond the current standardized model. Making use of the plasticity of human neuronal circuits, through repeated exposure to a new set of stimuli, one could train the brain to expand perception, and therefore sensory preferences, to help people improve their diets or rid themselves of unhealthy food consumption habits.

A deep understanding of multisensory perception is only a matter of time. Today many research groups are working in this exciting field and on its relevance to the future of food consumption, consumer experience, sensory marketing and so on. For instance, there is scientific evidence showing that sounds can modulate, for better or worse, taste and texture perception. This use of auditory inputs to influence orosensory perception has gained attention in recent years from chefs and airlines wanting to offer food that is delicious despite the taste-suppressing noise of a flight. Furthermore, each sensory preference and multisensory interaction reinforces synaptic connections between neurons that can be measured by methods based on morphological, electrophysiological and behavioral analysis, which are the basis for unraveling the crossmodal brain circuitry. Advanced computational technologies of mixed reality, with augmentation of both sensorial and virtual inputs, will help customize perception and create more dynamic and immersive experiences. The range of possibilities for neuroscience is huge, and limited only by our imagination.

We have been unwittingly trained to disown the sensory richness of food to the point of acquiring a certain degree of neophobia toward culinary diversity. But by exploring the multisensory approach, there is a chance to learn another way in which our senses work. This is key to rediscovering the importance of food and nature, to addressing important questions around biodiversity, resources, food consumption and even the ecosystem within us (our microbiota), and to upgrading human perception to minimize the sensorial gaps that keep us separated from a more sustainable future. As a neuroscientist, I want to apply the multisensorial model of perception to rewire human senses, thereby improving our relationship with food and the environment, and to translate that knowledge into more understandable language so that people can upgrade their sensorial self-knowledge and improve the human-food interplay.

I devised my platform Sensorytrip as a bridge to connect knowledge to people. Its purpose is not just to guide consumers to make healthier food choices or to overcome gastronomic racism; it is also to create a channel of communication with artists and gastronomes who can apply the state of the art to create unique experiences or to explore deeper ways of perception. For instance, it could be interesting for a chef to understand which sensory cues dominate when someone enjoys a dish or to unravel the eating preferences that make each diner different.

Unlike other educational approaches to improving human-food or human-environment interactions, multisensory learning taps into the most ancestral roots of the human experience: it is through the five senses that the brain builds and interprets reality. That’s why this approach is so powerful for shaping human behavior. What we are is defined by the pathways of our perception.